Learnings deploying sitespeed.io online using Docker

17 July 2015

Early this year I was really lucky and got sponsored by The Swedish Internet Foundation to make it easier to use sitespeed.io (it's great that they support Open Source!). If you don't know about sitespeed.io: it's an Open Source web performance tool that tests a site against web performance best practice rules and measures how fast the page is using the Navigation Timing API.

I took some time off from work and worked 100% on the online version. Now, a couple of months later, you can test it yourself at https://run.sitespeed.io. It's in beta and still needs some love, but you can test a page, and that's pretty nice.

I've put a lot of work into making this happen and want to share a couple of things I've learned. But first, let's look at the setup.

The stack

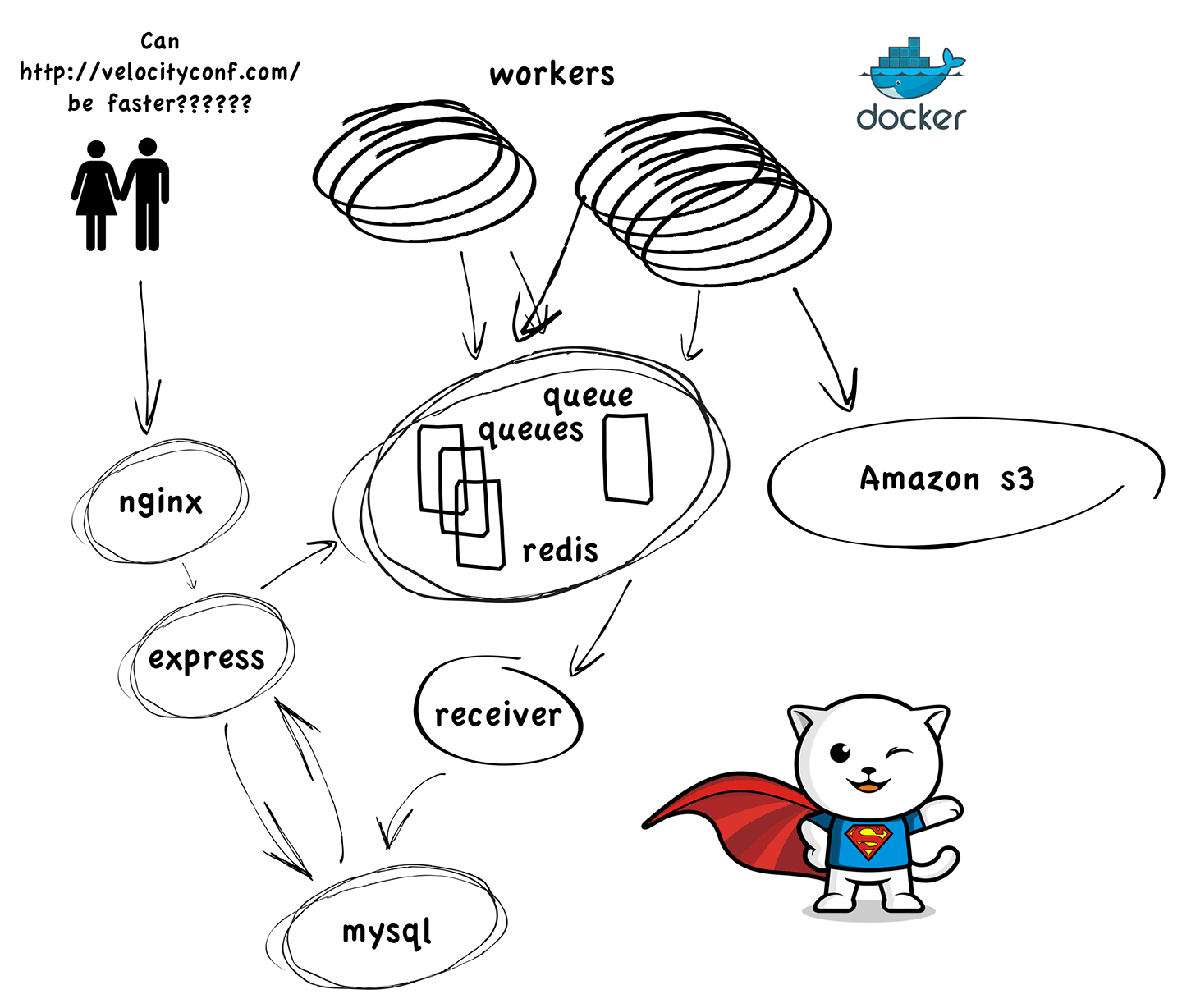

There are three components specific to the online version: the server that serves the pages to the user, the workers that analyze the pages, and the receiver that receives status messages from the workers. Then we use standard components:

- Node.js and Express - I use Node because I've grown kind of fond of it since I moved sitespeed.io to Node.

- MySQL - Why do I use MySQL? Well, emotional reasons. When I worked at Spray in the early 2000s and we switched from Oracle to MySQL, I got a warm fuzzy feeling. I still get that feeling when I think about MySQL. That's why :)

- Redis - we use Redis as a queue between the workers and the receiver.

- nginx - I love how easy it is to configure nginx

- sitespeed.io - No surprise here: I use sitespeed.io to analyze the pages
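To make the worker/receiver split a bit more concrete, here is a minimal Python sketch of the status-message flow. It is not the project's code (that is Node.js): a standard-library `queue.Queue` stands in for the Redis list, and the message fields `runId` and `status` are hypothetical. With real Redis, a worker would typically `LPUSH` onto a list and the receiver would block on `BRPOP`, which gives the same first-in, first-out order.

```python
import json
import queue
from typing import Optional

# Stand-in for a Redis list (assumption): workers push, the receiver pops.
status_queue: "queue.Queue[str]" = queue.Queue()


def worker_report(run_id: str, status: str) -> None:
    """Worker side: serialize a (hypothetical) status message and enqueue it."""
    status_queue.put(json.dumps({"runId": run_id, "status": status}))


def receiver_poll(timeout: float = 1.0) -> Optional[dict]:
    """Receiver side: wait up to `timeout` seconds for the next status message."""
    try:
        raw = status_queue.get(timeout=timeout)
    except queue.Empty:
        return None
    return json.loads(raw)


if __name__ == "__main__":
    worker_report("run-42", "analyzing")
    worker_report("run-42", "done")
    print(receiver_poll())  # {'runId': 'run-42', 'status': 'analyzing'}
    print(receiver_poll())  # {'runId': 'run-42', 'status': 'done'}
```

The point of the queue is decoupling: workers can finish and report at their own pace, and the receiver only has to drain one place.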

The whole thing looks something like this.

From a user's point of view

You add the URL that you want to analyze and choose a couple of configuration options. If you have used sitespeed.io, you know there's a lot of configuration; sometimes it's too configurable even for me, and I built it :)

The configuration in the online version is stripped down; I wanted it to be super easy to start a test, even from a mobile. I will probably add more configuration options later.

Right now, analyzing a site is limited to one URL per run, tested one time. Yep, I know, that's against everything we usually teach with sitespeed.io: test all the URLs of your site and measure the timings many, many times. The problem is that analyzing many pages takes a long time, which means we need many workers to keep it fast for multiple users. When/if we find some sponsors, we will add more workers and then we can increase the number of URLs per run.

It's boring waiting for tests to finish, so we wanted to make the waiting time fun: you will see random cats from The Cat API. Awesome! If you're into dogs instead, show me the way to a dog API and I'll implement that too.

You will also get some tips about web performance, and the best part is that you can share your own tips by sending a pull request to the project.

When the test is finished you get an overall score and then you can choose to download the result (the same HTML files generated by sitespeed.io) or view them.

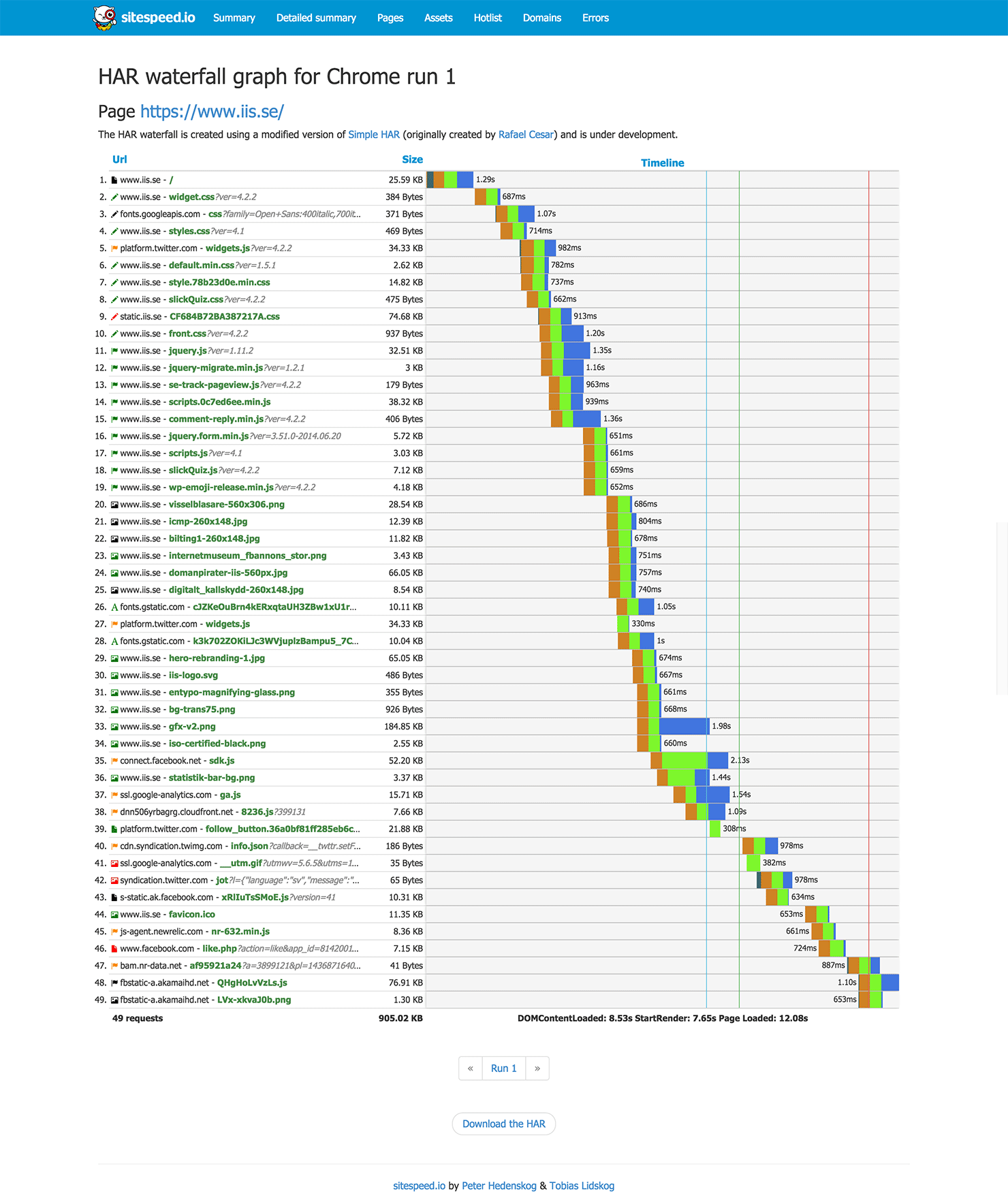

New in the latest version of sitespeed.io and the online version is that we can finally see the HAR files. We use a modified version of SimpleHar with some extra features, and the colors match WebPageTest's HAR view. One new thing is that the name of each response is colored: if it is yellow or red, it is something you should look into, such as missing cache headers or images that could probably be made smaller. We will add more rules later to make it easier to spot interesting assets.
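As an illustration of the kind of rule behind those colors, here is a hedged Python sketch that walks a HAR file's `log.entries` (the standard HAR 1.2 layout with `request`, `response.headers` and `response.content`). The size threshold and the green/yellow/red mapping are assumptions made up for the example, not the rules in the modified SimpleHar.

```python
from typing import Any, Dict, List, Tuple

LARGE_IMAGE_BYTES = 100_000  # hypothetical threshold for "could be smaller"


def warnings_for(entry: Dict[str, Any]) -> List[str]:
    """Return warnings for one HAR entry (hypothetical rules)."""
    response = entry.get("response", {})
    names = {h["name"].lower() for h in response.get("headers", [])}
    warnings = []
    if "cache-control" not in names and "expires" not in names:
        warnings.append("missing cache headers")
    content = response.get("content", {})
    is_image = content.get("mimeType", "").startswith("image/")
    if is_image and content.get("size", 0) > LARGE_IMAGE_BYTES:
        warnings.append("large image")
    return warnings


def color_for(warnings: List[str]) -> str:
    """No warnings is green, one is yellow, more is red (assumed mapping)."""
    if not warnings:
        return "green"
    return "yellow" if len(warnings) == 1 else "red"


def colorize(har: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Pair each request URL in the HAR with its color."""
    return [
        (entry["request"]["url"], color_for(warnings_for(entry)))
        for entry in har["log"]["entries"]
    ]
```

New rules slot in as extra checks inside `warnings_for`, which matches the plan of adding more rules over time.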

Learnings

I hadn't done much work with Docker before, so most of the learnings are from the Docker world.

Docker Docker Docker

I deploy everything using Docker containers because it's super fast and simple. I love it! Docker makes it easy to test things locally and know they work in production, to change hosting, and to add new workers in new locations around the world. I'm not sponsored by Docker, by the way :)

What's been cool about building the online version is that I got the opportunity to Dockerize sitespeed.io. I had used Docker before, but only ready-made containers.

The size of the container

One problem for me has been keeping the containers small. If you have Firefox, Chrome and sitespeed.io in the same container, it can reach almost 1 GB, and that's large if you want to download it quickly. There are tricks to keep it small (remove build tools, make fewer Docker layers), but if you have many dependencies it will still be large.

To minimize the size, there are four different containers that can analyze a web page (all four have sitespeed.io): one standalone with only sitespeed.io, one with Chrome, one with Firefox, and one with both Chrome and Firefox. You can use these yourself if you want a clean environment when you run your tests (we have had over 35,000 downloads of the main container, the one with both Firefox and Chrome; that's pretty cool).

One problem has been keeping up with browser versions. Now and then a new browser version breaks Selenium (we use Selenium to drive the browsers); it has happened with both Chrome and Firefox in recent years. That's why it is super important to keep track of the exact browser version we use (it must match the Selenium version), and why our base containers (a Docker container can extend, or be built upon, another container) contain a specific browser version. We have three different base containers: Firefox, Chrome, and Firefox plus Chrome.

The sitespeed.io containers are then built on top of specific tagged versions of the browser containers. It adds some extra work: when a new browser version is released, I need to build a new browser container, change the dependency in sitespeed.io, test it, and then release the new containers. But it is perfectly safe.
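One way to enforce that discipline is a small check in CI that refuses to build on an unapproved base. This Python sketch reads the first `FROM` line of a Dockerfile and compares it with a list of approved, version-tagged browser images. The image names and tags are hypothetical placeholders, not the real sitespeed.io images, and the simple parser ignores extras such as `FROM --platform=...`.

```python
# Hypothetical names: the approved, version-tagged browser base images that
# have been tested together with the Selenium version in use.
APPROVED_BASES = {
    "example/firefox:1.0.0",
    "example/chrome:1.0.0",
    "example/firefox-chrome:1.0.0",
}


def base_image(dockerfile_text: str) -> str:
    """Return the image named on the first FROM line of a Dockerfile."""
    for line in dockerfile_text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].upper() == "FROM":
            return parts[1]
    raise ValueError("no FROM line found")


def check_base(dockerfile_text: str) -> None:
    """Raise if the Dockerfile builds on anything but an approved, pinned base."""
    image = base_image(dockerfile_text)
    if image not in APPROVED_BASES:
        raise ValueError(f"unapproved base image: {image}")
```

Upgrading a browser then means adding the new tag to the approved set in the same change that bumps the dependency, which keeps the browser/Selenium pairing explicit.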

Docker and tags

There are two things I learned about Docker and tags:

- Always tag your container with the version number, and always pull and run specific tags (not latest), so you know exactly which version of the software you are running.

- Building your container online (on the Docker Hub, using an automated build) is good because it checks out your code from GitHub and builds it in a clean environment. What's not perfect is tagging with automated builds. When I make a new version of a container, I want to tag it both as latest and with the version number. That's not doable through the web interface; you can only set one tag per build. So my workflow on the Docker Hub is to build the same container twice: once with the tag latest and once with the specific version tag. Building from the command line lets you add multiple tags, but the Hub doesn't.
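Both rules can be captured in a few lines. This is a hedged Python sketch, not part of sitespeed.io: `is_pinned` rejects references that would fall back to `latest`, and `release_commands` builds the command-line workflow (build once with the version tag, then add `latest` with `docker tag`). The repository name is a hypothetical placeholder; each command could be run with `subprocess.run(cmd, check=True)`.

```python
from typing import List


def is_pinned(image_ref: str) -> bool:
    """True if the reference pins a tag other than 'latest'."""
    _, sep, tag = image_ref.rpartition(":")
    # No ':' at all, or a ':' that belongs to a registry port (host:5000/repo),
    # means no tag was given, so Docker would fall back to 'latest'.
    if not sep or "/" in tag:
        return False
    return tag != "latest"


def release_commands(repo: str, version: str) -> List[List[str]]:
    """Build once with the version tag, then add 'latest' as a second tag."""
    versioned = f"{repo}:{version}"
    latest = f"{repo}:latest"
    return [
        ["docker", "build", "-t", versioned, "."],
        ["docker", "tag", versioned, latest],
        ["docker", "push", versioned],
        ["docker", "push", latest],
    ]
```

Tagging the already-built image avoids the second build the Hub workflow needs, so both tags are guaranteed to point at the same image.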

Docker and logs

You can get the logs from your container using docker logs <id>, but I think the best way is to always let the log files inside the container live outside it, on the host server, by mounting them as a volume. That means you probably need many parameters when you start your container, one mount for each log file. Or, if you can, make your container write all its log files to one directory and map that one.
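Here is a small Python sketch of that idea: it builds a `docker run` command with one `-v host:container` bind mount per log directory. The image name, container name and both paths are hypothetical; with the "one log directory" approach the mapping has a single entry.

```python
import shlex
from typing import Dict, List


def docker_run_args(image: str, name: str, log_mounts: Dict[str, str]) -> List[str]:
    """Build a `docker run` command that bind-mounts log directories to the host.

    log_mounts maps a host directory to the directory the container logs to.
    """
    args = ["docker", "run", "-d", "--name", name]
    for host_dir, container_dir in log_mounts.items():
        args += ["-v", f"{host_dir}:{container_dir}"]
    args.append(image)
    return args


if __name__ == "__main__":
    # One mount is enough when the app writes all its logs to one directory.
    cmd = docker_run_args("example/worker:1.0.0", "worker-1", {"/var/log/worker": "/app/logs"})
    print(shlex.join(cmd))
```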

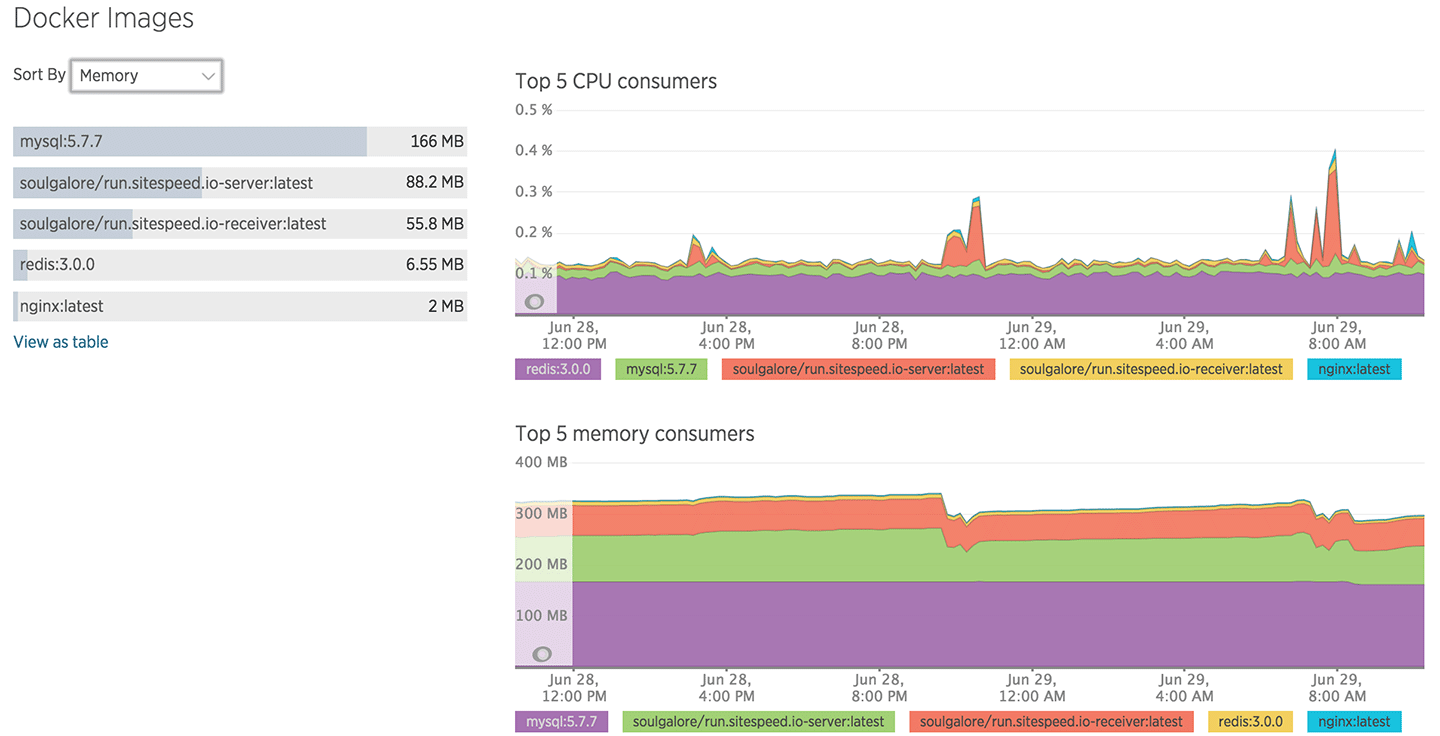

Keep track of Docker containers

If you run multiple containers on your server, how do you keep track of them? Which container uses the most memory and CPU? I use New Relic; the containers are picked up as long as you add the New Relic user to the docker group. That's cool and makes it possible to see things on a container level.

This is new in New Relic, and I miss Docker support in the iOS application and in the alerts functionality. It would be cool to get alerts on a container level instead of a server level.

Docker in Docker

The worker container (which analyzes the pages) runs sitespeed.io inside another container. Yes, we score 100% coolness by running Docker in Docker :) However, it's good to know that it won't work out of the box; follow the instructions at https://github.com/jpetazzo/dind to make it work.

It's worth knowing that New Relic will not pick up Docker containers running inside Docker.

Docker and disk space

If you use Docker, you know that downloading and running containers takes space. I haven't fully figured out the best way to remove all data from old containers (that doesn't happen automatically).

Today I use the docker-cleanup-volumes.sh script. But I think there's a bug somewhere, because sometimes old containers that are no longer running still take up space even though I've removed them. I'd love it if someone could help me with best practices for removing old data.
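As one possible complement to that script (not a fix for the bug described), here is a hedged Python sketch that removes stopped containers together with their anonymous volumes (`docker rm -v`) and deletes dangling, untagged images. It only uses standard Docker CLI filters (`status=exited`, `dangling=true`); the runner is injectable so the logic can be tested without a Docker daemon. Named volumes are not touched.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def run(cmd: List[str]) -> str:
    """Run a command and return its stdout; raises if the command fails."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def remove_exited_containers(runner: Runner = run) -> List[str]:
    """Remove stopped containers together with their anonymous volumes (-v)."""
    ids = runner(["docker", "ps", "-a", "-q", "--filter", "status=exited"]).split()
    if ids:
        runner(["docker", "rm", "-v", *ids])
    return ids


def remove_dangling_images(runner: Runner = run) -> List[str]:
    """Remove untagged images left behind when images are rebuilt."""
    ids = runner(["docker", "images", "-q", "--filter", "dangling=true"]).split()
    if ids:
        runner(["docker", "rmi", *ids])
    return ids
```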

Docker on Mac OS X

Docker works well on Mac, but there are two things that are good to know. I move around quite a lot and switch between different wifi networks, and each time I use a new internet connection I need to close and re-open boot2docker (the helper program you need to run Docker on Mac) to get it working. That's annoying, since the error you get without restarting says nothing about how to solve it.

The other problem happened when I upgraded to the latest release, 1.7. It happened on the day I was supposed to release the first beta version. I know: it's stupid to always try out the latest releases. What happens now on my Mac is that I need to destroy and re-init boot2docker every time I restart, to get rid of a certificate bug. The thing is that when you destroy it, all your downloaded containers are removed, so they need to be downloaded again. But wait, a couple of days ago 1.7.1 was released and it seems to fix the problem. :)

Running Chrome and Firefox on Linux

We use Selenium to drive the browsers. That works flawlessly on Mac OS X, but the drivers on Linux aren't 100% stable, and sometimes they break. That's been quite a big problem, because we use the Selenium NodeJS API and there's some room for improvement there (the docs are sometimes wrong and it's easy to end up in async hell). Tobias is working on a promise-based version that will be released later this year.
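A common way to live with flaky drivers is to retry the browser run a few times before giving up. This Python sketch shows the pattern generically; it is not how sitespeed.io does it, and the attempt count and delays are hypothetical. The `sleep` function is injectable so the backoff can be tested without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def with_retries(
    task: Callable[[], T],
    attempts: int = 3,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying with a linearly growing delay if it raises."""
    last_error: Optional[BaseException] = None
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as err:  # browser/driver failures come in many types
            last_error = err
            if attempt < attempts:
                sleep(delay_s * attempt)
    raise RuntimeError(f"task failed after {attempts} attempts") from last_error
```

Chaining the last error with `from` keeps the original driver failure visible when every attempt fails, which helps when the logs are forwarded somewhere else.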

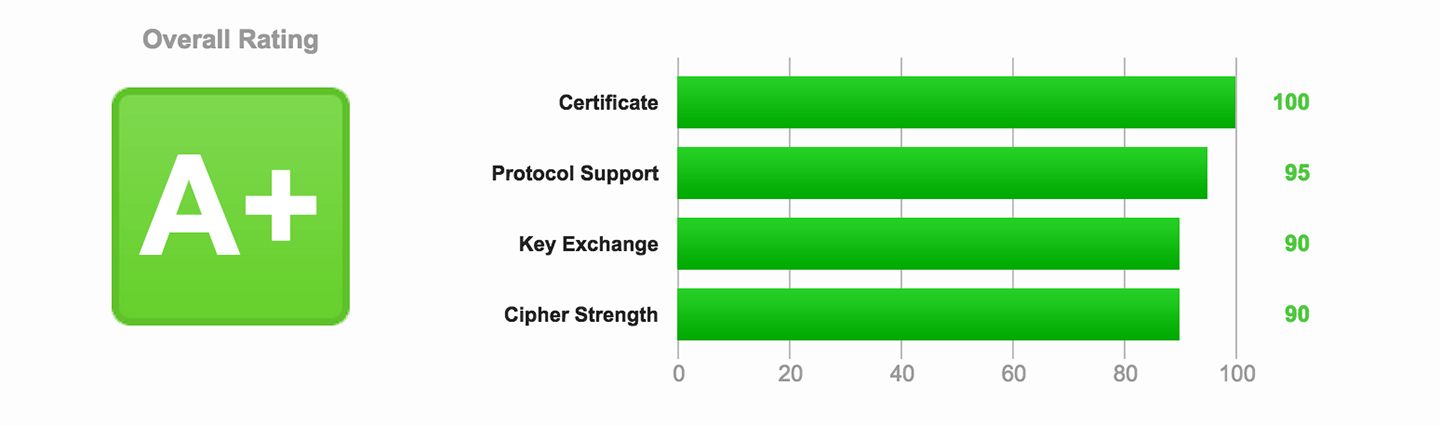

Setting up TLS

Everything must be secure! When you release a new service, it needs to be secure, and when you add TLS to your server you want to make sure you do it right. Mozilla has a great wiki page on how to do it. Then you need to test your setup, and you can do that with Qualys SSL Labs. The test grades your setup from F up to A+. Here's the grade for sitespeed.io:

Surprisingly many sites have SSL but don't ace the setup, making them less secure than they need to be. If you use the latest nginx Docker container and follow Mozilla's instructions, you will get an A+!
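The post's setup is nginx (where the relevant directive is `ssl_protocols`), so this is only a swapped-in Python illustration of one idea from guides like Mozilla's: refuse old protocol versions. The standard-library `ssl` module can set a minimum TLS version on a server context. The TLS 1.2 floor is an assumption for the example; check Mozilla's current recommendations for the exact settings, and the certificate paths are hypothetical.

```python
import ssl
from typing import Optional


def make_server_context(
    cert_file: Optional[str] = None, key_file: Optional[str] = None
) -> ssl.SSLContext:
    """Server-side TLS context that refuses protocol versions older than TLS 1.2."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        # Hypothetical paths to the certificate chain and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```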

I bought my certificate from Namecheap and that worked fine, but I didn't know about SSLMate at the time (it looks amazing). However, https://letsencrypt.org/ will soon be live and then everyone can use SSL for free. Hooray! :)

We use two subdomains for the online service; one of them is for the result pages, which are hosted on S3. I use Cloudflare in front of it to make that domain kind of secure. That's much cheaper than adding SSL support to S3.

Keep track of what's happening using Slack

Tobias and I use Slack to keep track of everything. Even though we are only a two-person team, it's a great way to know what's happening. All code is on GitHub and built by Travis CI, and both report to Slack when something happens.

We also use the New Relic integration (there's no support for specific Docker containers, so we can only get alerts for the whole server setup). FYI, the Slack integration in New Relic doesn't work out of the box; choose webhooks instead of the Slack integration.

Building containers on the Docker Hub can take time. There's no out-of-the-box integration with Slack, but you can add a webhook and use slack-docker-hub-integration to post to your Slack channel. It's really nice to get a notice when your build is ready. But hey, if the build fails you don't get notified; what's up with that?

We also use Slack to keep track of how the application is doing, by sending all error messages from the logs to Slack using Winston-Slack. That's super helpful because we see every test that fails and any problems in the application.
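Winston-Slack is a Node.js library; as a language-neutral illustration of the same pattern, here is a Python sketch of a `logging.Handler` that forwards ERROR records to a Slack incoming webhook as a JSON body with a `text` field. The webhook URL is something you create in Slack; the one in the usage note is a hypothetical placeholder. The sender is injectable so the handler can be tested without network access.

```python
import json
import logging
import urllib.request
from typing import Callable, Optional

Sender = Callable[[str, bytes], None]


def post_json(url: str, body: bytes) -> None:
    """POST a JSON body to a Slack incoming-webhook URL."""
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=5):
        pass


class SlackHandler(logging.Handler):
    """Logging handler that forwards ERROR (and worse) records to Slack."""

    def __init__(self, webhook_url: str, send: Optional[Sender] = None) -> None:
        super().__init__(level=logging.ERROR)
        self.webhook_url = webhook_url
        self.send = send or post_json

    def emit(self, record: logging.LogRecord) -> None:
        try:
            body = json.dumps({"text": self.format(record)}).encode("utf-8")
            self.send(self.webhook_url, body)
        except Exception:
            self.handleError(record)  # never let logging crash the app
```

Usage would look like `logging.getLogger("worker").addHandler(SlackHandler("https://hooks.slack.com/services/YOUR/WEBHOOK/PATH"))`, after which every `logger.error(...)` also lands in the channel.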

One thing that's not perfect, though: we run everything inside Docker and act on the logs inside the containers. But if something happens to Docker itself on the server, we don't catch it (that has happened on the dashboard server). We need to add something that keeps track of Docker and reports if it starts failing.
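A minimal sketch of such a watchdog, assuming it runs on the host outside Docker (for example from cron): it calls `docker info`, which fails when the daemon is down, and raises an alert on a non-zero exit code or a hang. The alert function defaults to `print`; wiring it to Slack is left as an assumption. Runner and alert are injectable for testing.

```python
import subprocess
from typing import Callable, List


def docker_exit_code(cmd: List[str]) -> int:
    """Run a command and return its exit code (-1 if it could not run or hung)."""
    try:
        return subprocess.run(cmd, capture_output=True, timeout=10).returncode
    except (OSError, subprocess.TimeoutExpired):
        return -1


def check_docker(
    runner: Callable[[List[str]], int] = docker_exit_code,
    alert: Callable[[str], None] = print,
) -> bool:
    """Return True if the Docker daemon answers `docker info`; otherwise alert."""
    code = runner(["docker", "info"])
    if code != 0:
        alert(f"Docker daemon check failed (exit code {code})")
        return False
    return True
```

Because the check lives outside the containers, it still fires when the in-container log shipping is dead along with Docker.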

Digital Ocean

We deploy the application on Digital Ocean. I really like it because it's super easy to add and start a new server. There are two things, though, that are important to know:

- A default droplet (your instance) doesn't have swap set up, so you need to set up swap for every instance you create. That's easy, but since you need to do it for every instance, it would be nice if it happened out of the box.

- It's easy to run out of money! Even though instances are cheap, it's so easy to create a new droplet to test something out that the money disappears faster than you can say Digital Ocean.
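Since swap has to be set up on every droplet, it helps to keep the steps in one place. This Python sketch just generates the usual Linux swap-file commands for a given size, ready to paste into a setup script (they need root). The 2G default and the `/swapfile` path are assumptions; pick a size that suits the droplet.

```python
from typing import List


def swap_setup_commands(size: str = "2G", path: str = "/swapfile") -> List[str]:
    """Shell commands that create, enable and persist a swap file (run as root)."""
    return [
        f"fallocate -l {size} {path}",  # reserve the space
        f"chmod 600 {path}",  # only root may read it
        f"mkswap {path}",  # format it as swap
        f"swapon {path}",  # enable it now
        f"echo '{path} none swap sw 0 0' >> /etc/fstab",  # enable it after reboots
    ]


if __name__ == "__main__":
    print("\n".join(swap_setup_commands()))
```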

Learnings part 2?

Yes, there will be a part 2 later, when the site has been running for a while. In the meantime, check out the code or test your site!

Written by: Peter Hedenskog